Designing for imperfect scenarios (Design for Error – Part 5)

- Mind Brunch

- Sep 20, 2018


Engineers often develop products on the assumption that not much will go wrong while the device is in operation. Except that it does, a lot, in the real world. How, then, can products be designed to work flawlessly in a flawed environment?

Imagine asking a sales representative about a product. What questions will each of you ask the other? Are they simple and straightforward? Are they few or many? Will you be satisfied once a couple of your questions are answered? No, you’ll probe further until all your doubts are cleared and you have a good understanding of the product, right? Then how can we expect products that are nowhere near us in sentient thinking to tell whether our inputs are correct or not?

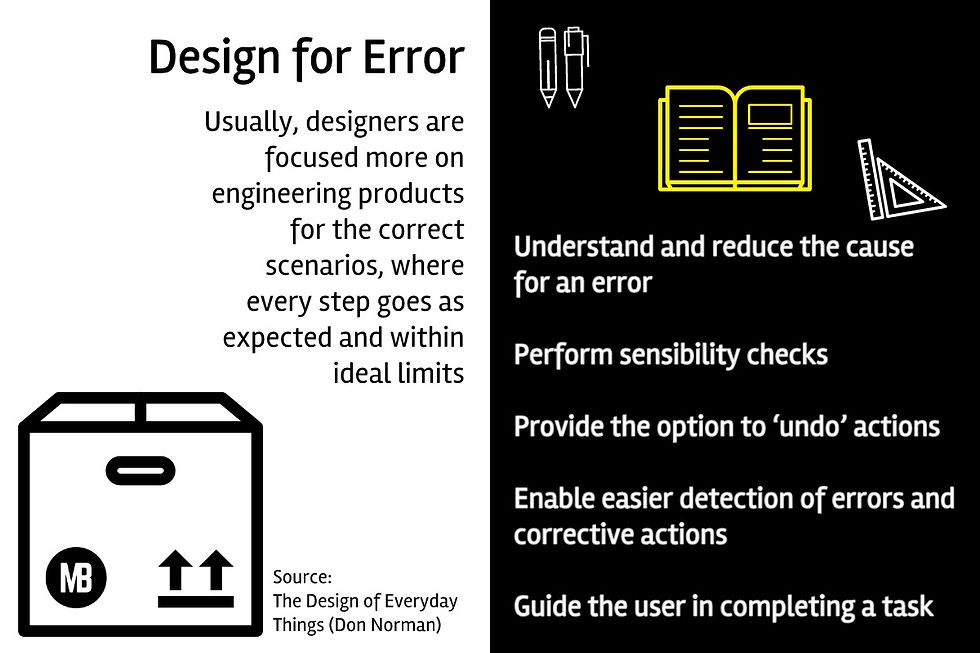

In simple words, machines and other everyday products aren’t smart enough to know whether an input is correct, or what the motive behind it is. Yet designers focus mostly on engineering products for the correct scenarios, where every step goes as expected and stays within ideal limits. What about the times when things take a turn for the worse? Sadly, many devices aren’t designed to handle errors, whether deliberate or accidental. Imagine the horror a patient goes through if a nurse or other medical professional unknowingly keys in a number far too big for a normal dose on, say, an X-ray machine. We have all read about instances where a tiny typing error wiped huge amounts from bank accounts. The main reason behind all of this is that it is easier to make an error than it is to notice and correct it.

Don Norman, in his book “The Design of Everyday Things”, provides the following list of useful cues:

- Understand the cause of an error and work towards reducing that cause

- Carry out sensibility checks to determine if the design passes a common-sense threshold

- Allow the user to ‘undo’ actions; where that isn’t possible, make the actions that are liable to go wrong a little harder to execute

- Make it easier to detect errors and to take corrective action

- Instead of labelling actions as errors, view them as approximations of what the user seeks to achieve, and guide the user in completing the task

Show the information that matters

Mistakes arise when the system provides incomplete or unimportant information, because the feedback it gives may not help the user complete the task correctly. Interruptions are another main culprit. When a worker resumes after an interruption, it is critical to remember the present state of the system or the step that was last completed. Instead of supplying this useful information, many products are designed to show minute details that aren’t needed beyond short-term memory. The airline industry has come up with a novel idea to deal with interruptions.

“Sterile Cockpit Configuration” is a practice followed during certain phases of a flight, in which the pilots aren’t allowed to discuss anything other than matters concerning the control of the aircraft. Similar practices can reduce slips and other mistakes in situations like driving: as a particular junction approaches, the conversation is halted so that the driver can focus completely on the route and on tasks like switching on the turn signals.

Another effective way to counter errors is to limit the number of warning messages shown to the user. Oftentimes, such messages report minute variations that aren’t critical to the smooth operation of the system. It is advisable to save messages and alarms for the critical instances when the user’s attention is paramount. Doing so also keeps the user from becoming complacent. In fact, smart and effective use of spoken warning signals can go a long way towards preventing errors.

Constrain occurrence of errors

Research on errors has shed light on areas that can be worked on to prevent them. One of them is to build a system that makes it difficult to err. Consider the multiple reservoirs in a vehicle, each dedicated to a particular type of fluid or oil. How do we prevent people from mixing up the fluids and pouring the wrong one into a reservoir? The mouth of each reservoir is designed differently, so that it matches only the container of the fluid it is meant for. Along with this, a unique color for each fluid can also serve as an indicator. These measures are similar in approach to poka-yoke.
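The same idea carries over to software: make the wrong action impossible to complete instead of merely warning about it. Below is a minimal sketch in Python. The `Fluid`, `Reservoir` and `WrongFluidError` names are made up for this illustration and are not taken from Norman's book.

```python
from enum import Enum


class Fluid(Enum):
    # Hypothetical fluid types, chosen only for this illustration.
    ENGINE_OIL = "engine oil"
    COOLANT = "coolant"
    BRAKE_FLUID = "brake fluid"


class WrongFluidError(ValueError):
    """Raised when a fluid is poured into a reservoir that does not accept it."""


class Reservoir:
    """A reservoir whose 'mouth' fits exactly one kind of fluid."""

    def __init__(self, accepts: Fluid, capacity_litres: float) -> None:
        self.accepts = accepts
        self.capacity_litres = capacity_litres
        self.level_litres = 0.0

    def fill(self, fluid: Fluid, litres: float) -> float:
        # The constraint: a mismatched fluid is rejected before anything is poured.
        if fluid is not self.accepts:
            raise WrongFluidError(
                f"This reservoir takes {self.accepts.value}, not {fluid.value}."
            )
        # Never fill beyond the capacity of the reservoir.
        self.level_litres = min(self.capacity_litres, self.level_litres + litres)
        return self.level_litres
```

A coolant reservoir created with `Reservoir(Fluid.COOLANT, 5.0)` accepts `fill(Fluid.COOLANT, 2.0)` but refuses `fill(Fluid.ENGINE_OIL, 1.0)`, much like a container that simply doesn't fit the mouth.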

Do you wish to return or continue?

As discussed earlier, the option to ‘Undo’ a step helps immensely in cutting down on errors. Although many such options cover only the task just completed, providing multi-level Undo is found to be optimal. Whether while typing this blog or on the numerous occasions when we have misspelled words, being able to delete and retype a sentence has taught us that we can trust the computer to help us backtrack and correct our errors. Sometimes, however, we end up confirming a step that we didn’t intend to. Why does this happen? Just like warning signals, a plethora of confirmation messages can nudge a user into paying less attention, which leads to the occasional mistake of confirming a wrong action. The key takeaway from this section is to combine the ability to reverse errors with confirmation messages that are specific to the action, for example by highlighting the window that is about to be closed (assuming you have a ton of them open on your screen).
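One common way to offer multi-level Undo is to keep a history of earlier states and step back through it one level at a time. The sketch below assumes a tiny text editor; the `UndoableText` class and its method names are hypothetical and only meant to illustrate the idea.

```python
class UndoableText:
    """A tiny text buffer that remembers every earlier state."""

    def __init__(self, text: str = "") -> None:
        self._text = text
        self._history = []  # earlier states, oldest first

    @property
    def text(self) -> str:
        return self._text

    def type_text(self, new_text: str) -> None:
        # Save the current state before changing it, so the change can be undone.
        self._history.append(self._text)
        self._text += new_text

    def delete_last(self, count: int) -> None:
        # Remove the last `count` characters; a count of zero or less does nothing.
        if count <= 0:
            return
        self._history.append(self._text)
        self._text = self._text[:-count]

    def undo(self) -> bool:
        # Step back one level; report False when there is nothing left to undo.
        if not self._history:
            return False
        self._text = self._history.pop()
        return True
```

Because every change pushes a snapshot, calling `undo()` repeatedly walks back through all earlier states rather than only the last one. Storing full snapshots keeps the sketch short; a real editor would more likely store the individual changes.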

Sensibility Checks

Imagine yourself in the following situation. You are about to transfer 1,000 units of your local currency through net banking. You have approximately 5,000 in your account. By mistake you type in 10,000 and hit send. The system only asks whether you want to complete the transaction. You press continue and it’s done. It won’t be long before you realize the consequences. Maybe the bank allows for credit; maybe the transaction will bounce, leaving you in the soup. Don’t you wish the system had automatically flashed a message telling you that the amount is more than what you have, and what the outcomes could be? That is exactly what a sensibility check is all about: the system checks whether a particular input is sensible enough to carry out. Previously, while discussing the immense pressure of work and its effects on errors, we briefly discussed X-ray machines and wrong inputs. Such checks will work wonders in many industries, including the medical field.
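The transfer scenario can be sketched in a few lines of Python. This is only an illustration of the idea, not how any real bank does it; the `check_transfer` function, the `CheckResult` type and the wording of the messages are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    sensible: bool
    message: str = ""


def check_transfer(amount: float, balance: float) -> CheckResult:
    """Check whether a transfer passes a common-sense test before sending it."""
    if amount <= 0:
        return CheckResult(False, "The amount must be greater than zero.")
    if amount > balance:
        # Be specific: say what was entered, what is available, and what may happen.
        return CheckResult(
            False,
            f"You entered {amount:,.0f} but only have {balance:,.0f} available. "
            "The transfer may bounce or put the account into credit. "
            "Please check the amount.",
        )
    return CheckResult(True)
```

With the numbers from the story, `check_transfer(1000, 5000)` passes, while `check_transfer(10000, 5000)` is flagged with a message that names both the amount typed and the balance, instead of a generic "Do you want to continue?".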

Making it less slippery

Slips occur when tasks are taken over by the automatic behavior that arises from one’s familiarity with a particular action. That much we all know by now. Slips can be reduced by providing feedback that is hard to miss, and the effect can be amplified by giving each task feedback in its own unique manner. This helps draw the user’s attention to the task at hand, especially while multitasking. An example is a machine-readable code that accompanies a doctor’s prescription. Given how notorious doctors are for the legibility of their handwriting, an electronic code for a medicine will reduce errors on the pharmacist’s side. The same can be extended to nurses who attend to numerous patients every day, so that they administer the right amount of the correct medicine to the appropriate patient at the right time.
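As a rough sketch of how such a machine-readable code could be put to use, the snippet below compares what was scanned at the bedside against the prescription and names each mismatch separately. The `Prescription` fields, the `verify_scan` function and the identifier formats are hypothetical; real hospital systems will differ.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Prescription:
    patient_id: str
    medicine_code: str
    dose_mg: float


def verify_scan(
    order: Prescription,
    scanned_patient_id: str,
    scanned_medicine_code: str,
    scanned_dose_mg: float,
) -> List[str]:
    """Return a list of problems; an empty list means the scan matches the order."""
    problems = []
    # Each mismatch gets its own distinct message, so it is hard to overlook.
    if scanned_patient_id != order.patient_id:
        problems.append("wrong patient")
    if scanned_medicine_code != order.medicine_code:
        problems.append("wrong medicine")
    if scanned_dose_mg != order.dose_mg:
        problems.append("wrong dose")
    return problems
```

An empty result means the scan matches the order; anything else tells the nurse or pharmacist exactly which part went wrong, rather than raising one generic alarm.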

A more common scenario in factories and large machines is the similarity of switches and buttons. They all look the same! Wouldn’t it be nice to design them differently so that even with automatic mental modes taking over, the probability of errors is still reduced?

Notes

This series is a summary of Chapter 5 (Human Error? No, it’s Bad Design) from the book ‘The Design of Everyday Things’ by Don Norman

You can also listen to this insightful podcast by NPR Hidden Brain to understand how our minds work under stress – https://n.pr/2KtFwLB
